YAML specification

The YAML specification defines which analysis should be performed by immuneML. It is defined under three main keywords:

definitions - describing the settings of datasets, encodings, ml_methods, preprocessing_sequences, reports, simulations and other components,

instructions - describing the parameters of the analysis that will be performed and which of the analysis components (defined under definitions) will be used for this,

output - describing how to format the results of the analysis (currently, only HTML output is supported).

The purpose of this page is to list all the YAML specification options. If you are not familiar with the YAML specification and want to get started, see How to specify an analysis with YAML.

The overall structure of the YAML specification is the following:

    definitions: # mandatory keyword
      datasets: # mandatory keyword
        my_dataset_1: # user-defined name of the dataset
          ... # see below for the specification of the dataset
      encodings: # optional keyword - present if encodings are used
        my_encoding_1: # user-defined name of the encoding
          ... # see below for the specification of different encodings
      ml_methods: # optional keyword - present if ML methods are used
        my_ml_method_1: # user-defined name of the ML method
          ml_method_class_name: # see below for the specification of different ML methods
            ... # parameters of the method if any (if none are specified, default values are used)
          # the parameters model_selection_cv and model_selection_n_folds can be specified for any ML method used and define if there will be
          # an internal cross-validation for the given method (if used with TrainMLModel instruction, this will result in the third nested CV, but only over method parameters)
          model_selection_cv: False # whether to use cross-validation and random search to estimate the optimal parameters for one split to train/test (True/False)
          model_selection_n_folds: -1 # number of folds if cross-validation is used for model selection and optimal parameter estimation
      preprocessing_sequences: # optional keyword - present if preprocessing sequences are used
        my_preprocessing: # user-defined name of the preprocessing sequence
          ... # see below for the specification of different preprocessing
      reports: # optional keyword - present if reports are used
        my_report_1:
          ... # see below for the specification of different reports
    instructions: # mandatory keyword - at least one instruction has to be specified
      my_instruction_1: # user-defined name of the instruction
        ... # see below for the specification of different instructions
    output: # how to present the result after running (the only valid option now)
      format: HTML

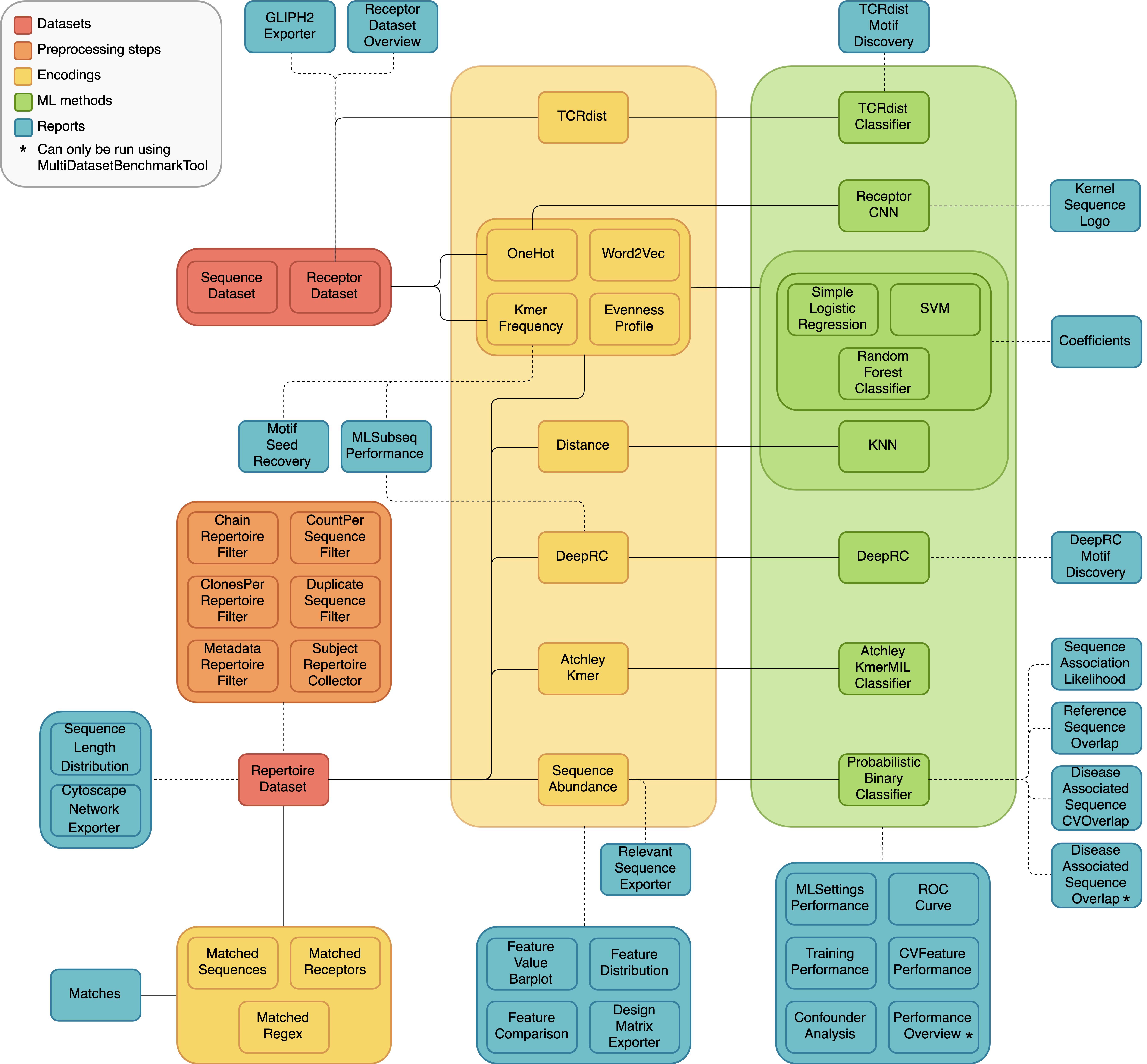

A diagram of the different dataset types, preprocessing steps, encodings, ML methods and reports, and how they can be combined in different analyses is shown below. The solid lines represent components that should be used together, and the dashed lines indicate optional combinations.

Definitions

Datasets

AIRR

Imports data in AIRR format into a Repertoire-, Sequence- or ReceptorDataset. RepertoireDatasets should be used when making predictions per repertoire, such as predicting a disease state. SequenceDatasets or ReceptorDatasets should be used when predicting values for unpaired (single-chain) and paired immune receptors respectively, like antigen specificity.

The AIRR .tsv format is explained here: https://docs.airr-community.org/en/stable/datarep/format.html and the AIRR rearrangement schema can be found here: https://docs.airr-community.org/en/stable/datarep/rearrangements.html

When importing a ReceptorDataset, the AIRR field cell_id is used to determine the chain pairs.

Arguments:

path (str): This is the path to a directory with AIRR files to import. By default path is set to the current working directory.

is_repertoire (bool): If True, this imports a RepertoireDataset. If False, it imports a SequenceDataset or ReceptorDataset. By default, is_repertoire is set to True.

metadata_file (str): Required for RepertoireDatasets. This parameter specifies the path to the metadata file. This is a csv file with columns filename, subject_id and arbitrary other columns which can be used as labels in instructions. Only the AIRR files included under the column ‘filename’ are imported into the RepertoireDataset. For setting SequenceDataset metadata, metadata_file is ignored, see metadata_column_mapping instead.

paired (str): Required for Sequence- or ReceptorDatasets. This parameter determines whether to import a SequenceDataset (paired = False) or a ReceptorDataset (paired = True). In a ReceptorDataset, two sequences with chain types specified by receptor_chains are paired together based on the identifier given in the AIRR column named ‘cell_id’.

receptor_chains (str): Required for ReceptorDatasets. Determines which pair of chains to import for each Receptor. Valid values are TRA_TRB, TRG_TRD, IGH_IGL, IGH_IGK. If receptor_chains is not provided, the chain pair is automatically detected (only one chain pair type allowed per repertoire).

import_productive (bool): Whether productive sequences (with value ‘T’ in column productive) should be included in the imported sequences. By default, import_productive is True.

import_with_stop_codon (bool): Whether sequences with stop codons (with value ‘T’ in column stop_codon) should be included in the imported sequences. This only applies if column stop_codon is present. By default, import_with_stop_codon is False.

import_out_of_frame (bool): Whether out of frame sequences (with value ‘F’ in column vj_in_frame) should be included in the imported sequences. This only applies if column vj_in_frame is present. By default, import_out_of_frame is False.

import_illegal_characters (bool): Whether to import sequences that contain illegal characters, i.e., characters that do not appear in the sequence alphabet (amino acids including stop codon ‘*’, or nucleotides). When set to false, filtering is only applied to the sequence type of interest (when running immuneML in amino acid mode, only entries with illegal characters in the amino acid sequence are removed). By default import_illegal_characters is False.

import_empty_nt_sequences (bool): imports sequences which have an empty nucleotide sequence field; can be True or False. By default, import_empty_nt_sequences is set to True.

import_empty_aa_sequences (bool): imports sequences which have an empty amino acid sequence field; can be True or False; for analysis on amino acid sequences, this parameter should be False (import only non-empty amino acid sequences). By default, import_empty_aa_sequences is set to False.

region_type (str): Which part of the sequence to import. By default, this value is set to IMGT_CDR3. This means the first and last amino acids are removed from the CDR3 sequence, as AIRR uses the IMGT junction. Specifying any other value will result in importing the sequences as they are. Valid values are IMGT_CDR1, IMGT_CDR2, IMGT_CDR3, IMGT_FR1, IMGT_FR2, IMGT_FR3, IMGT_FR4, IMGT_JUNCTION, FULL_SEQUENCE.

column_mapping (dict): A mapping from AIRR column names to immuneML’s internal data representation. For AIRR, this is by default set to:

    junction: sequences
    junction_aa: sequence_aas
    v_call: v_alleles
    j_call: j_alleles
    locus: chains
    duplicate_count: counts
    sequence_id: sequence_identifiers

A custom column mapping can be specified here if necessary (for example, adding additional data fields if they are present in the AIRR file, or using alternative column names). Valid immuneML fields that can be specified here are [‘sequence_aas’, ‘sequences’, ‘v_genes’, ‘j_genes’, ‘v_subgroups’, ‘j_subgroups’, ‘v_alleles’, ‘j_alleles’, ‘chains’, ‘counts’, ‘frame_types’, ‘sequence_identifiers’, ‘cell_ids’].

column_mapping_synonyms (dict): This is a column mapping that can be used if a column could have alternative names. The formatting is the same as column_mapping. If some columns specified in column_mapping are not found in the file, the columns specified in column_mapping_synonyms are instead attempted to be loaded. For AIRR format, there is no default column_mapping_synonyms.

metadata_column_mapping (dict): Specifies metadata for Sequence- and ReceptorDatasets. This should specify a mapping similar to column_mapping where keys are AIRR column names and values are the names that are internally used in immuneML as metadata fields. These metadata fields can be used as prediction labels for Sequence- and ReceptorDatasets. For AIRR format, there is no default metadata_column_mapping. For setting RepertoireDataset metadata, metadata_column_mapping is ignored, see metadata_file instead.

separator (str): Column separator, for AIRR this is by default "\t".

YAML specification:

    my_airr_dataset:
      format: AIRR
      params:
        path: path/to/files/
        is_repertoire: True # whether to import a RepertoireDataset
        metadata_file: path/to/metadata.csv # metadata file for RepertoireDataset
        metadata_column_mapping: # metadata column mapping AIRR: immuneML for Sequence- or ReceptorDataset
          airr_column_name1: metadata_label1
          airr_column_name2: metadata_label2
        import_productive: True # whether to include productive sequences in the dataset
        import_with_stop_codon: False # whether to include sequences with stop codon in the dataset
        import_out_of_frame: False # whether to include out of frame sequences in the dataset
        import_illegal_characters: False # remove sequences with illegal characters for the sequence_type being used
        import_empty_nt_sequences: True # keep sequences even if the `sequences` column is empty (provided that other fields are as specified here)
        import_empty_aa_sequences: False # remove all sequences with empty `sequence_aas` column
        # Optional fields with AIRR-specific defaults, only change when different behavior is required:
        separator: "\t" # column separator
        region_type: IMGT_CDR3 # what part of the sequence to import
        column_mapping: # column mapping AIRR: immuneML
          junction: sequences
          junction_aa: sequence_aas
          v_call: v_alleles
          j_call: j_alleles
          locus: chains
          duplicate_count: counts
          sequence_id: sequence_identifiers
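To illustrate the paired and receptor_chains arguments described above, the following is a minimal sketch of an AIRR import that produces a ReceptorDataset instead of a RepertoireDataset. The dataset name my_airr_receptor_dataset and the path are placeholders chosen for this example, and the metadata column names reuse the placeholders from the specification above. All parameters that are not listed are assumed to keep their AIRR defaults.

```yaml
my_airr_receptor_dataset: # hypothetical user-defined dataset name
  format: AIRR
  params:
    path: path/to/files/ # placeholder path to a directory with AIRR files
    is_repertoire: False # import individual receptors, not repertoires
    paired: True # True: ReceptorDataset, False: SequenceDataset
    receptor_chains: TRA_TRB # one of TRA_TRB, TRG_TRD, IGH_IGL, IGH_IGK
    metadata_column_mapping: # AIRR column name: immuneML metadata label
      airr_column_name1: metadata_label1 # placeholder names, usable as prediction labels
```

Since is_repertoire is False here, metadata_file is not given; labels come from metadata_column_mapping, and the two chains of each receptor are matched through the AIRR cell_id column.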

Generic

Imports data from any tabular file into a Repertoire-, Sequence- or ReceptorDataset. RepertoireDatasets should be used when making predictions per repertoire, such as predicting a disease state. SequenceDatasets or ReceptorDatasets should be used when predicting values for unpaired (single-chain) and paired immune receptors respectively, like antigen specificity.

This importer works similarly to other importers, but has no predefined default values for which fields are imported, and can therefore be tailored to import data from various different tabular files with headers.

For ReceptorDatasets: this importer assumes the two receptor sequences appear on different lines in the file, and can be paired together by a common sequence identifier. If you instead want to import a ReceptorDataset from a tabular file that contains both receptor chains on one line, see SingleLineReceptor import.

Arguments:

path (str): Required parameter. This is the path to a directory with files to import.

is_repertoire (bool): If True, this imports a RepertoireDataset. If False, it imports a SequenceDataset or ReceptorDataset. By default, is_repertoire is set to True.

metadata_file (str): Required for RepertoireDatasets. This parameter specifies the path to the metadata file. This is a csv file with columns filename, subject_id and arbitrary other columns which can be used as labels in instructions. For setting Sequence- or ReceptorDataset metadata, metadata_file is ignored, see metadata_column_mapping instead.

paired (str): Required for Sequence- or ReceptorDatasets. This parameter determines whether to import a SequenceDataset (paired = False) or a ReceptorDataset (paired = True). In a ReceptorDataset, two sequences with chain types specified by receptor_chains are paired together based on a common identifier. This identifier should be mapped to the immuneML field ‘sequence_identifiers’ using the column_mapping.

receptor_chains (str): Required for ReceptorDatasets. Determines which pair of chains to import for each Receptor. Valid values are TRA_TRB, TRG_TRD, IGH_IGL, IGH_IGK.

import_illegal_characters (bool): Whether to import sequences that contain illegal characters, i.e., characters that do not appear in the sequence alphabet (amino acids including stop codon ‘*’, or nucleotides). When set to false, filtering is only applied to the sequence type of interest (when running immuneML in amino acid mode, only entries with illegal characters in the amino acid sequence are removed).

import_empty_nt_sequences (bool): imports sequences which have an empty nucleotide sequence field; can be True or False. By default, import_empty_nt_sequences is set to True.

import_empty_aa_sequences (bool): imports sequences which have an empty amino acid sequence field; can be True or False; for analysis on amino acid sequences, this parameter should be False (import only non-empty amino acid sequences). By default, import_empty_aa_sequences is set to False.

region_type (str): Which part of the sequence to import. When IMGT_CDR3 is specified, immuneML assumes the IMGT junction (including leading C and trailing Y/F amino acids) is used in the input file, and the first and last amino acids will be removed from the sequences to retrieve the IMGT CDR3 sequence. Specifying any other value will result in importing the sequences as they are. Valid values are IMGT_CDR1, IMGT_CDR2, IMGT_CDR3, IMGT_FR1, IMGT_FR2, IMGT_FR3, IMGT_FR4, IMGT_JUNCTION, FULL_SEQUENCE.

column_mapping (dict): Required for all datasets. A mapping where the keys are the column names in the input file, and the values correspond to the names used in immuneML’s internal data representation. Valid immuneML fields that can be specified here are [‘sequence_aas’, ‘sequences’, ‘v_genes’, ‘j_genes’, ‘v_subgroups’, ‘j_subgroups’, ‘v_alleles’, ‘j_alleles’, ‘chains’, ‘counts’, ‘frame_types’, ‘sequence_identifiers’, ‘cell_ids’]. At least sequences (nucleotide) or sequence_aas (amino acids) must be specified, but all other fields are optional. A column mapping can look for example like this:

    file_column_amino_acids: sequence_aas
    file_column_v_genes: v_genes
    file_column_j_genes: j_genes
    file_column_frequencies: counts

column_mapping_synonyms (dict): This is a column mapping that can be used if a column could have alternative names. The formatting is the same as column_mapping. If some columns specified in column_mapping are not found in the file, the columns specified in column_mapping_synonyms are instead attempted to be loaded. For Generic import, there is no default column_mapping_synonyms.

metadata_column_mapping (dict): Optional; specifies metadata for Sequence- and ReceptorDatasets. This is a column mapping that is formatted similarly to column_mapping, but here the values are the names that immuneML internally uses as metadata fields. These fields can subsequently be used as labels in instructions (for example labels that are used for prediction by ML methods). This column mapping could for example look like this:

    file_column_antigen_specificity: antigen_specificity

The label antigen_specificity can now be used throughout immuneML. For setting RepertoireDataset metadata, metadata_column_mapping is ignored, see metadata_file instead.

columns_to_load (list): Optional; specifies which columns to load from the input file. This may be useful if the input files contain many unused columns. If no value is specified, all columns are loaded.

separator (str): Required parameter. Column separator, for example "\t" or ",".

YAML specification:

    my_generic_dataset:
      format: Generic
      params:
        path: path/to/files/
        is_repertoire: True # whether to import a RepertoireDataset
        metadata_file: path/to/metadata.csv # metadata file for RepertoireDataset
        paired: False # whether to import SequenceDataset (False) or ReceptorDataset (True) when is_repertoire = False
        receptor_chains: TRA_TRB # what chain pair to import for a ReceptorDataset
        separator: "\t" # column separator
        import_illegal_characters: False # remove sequences with illegal characters for the sequence_type being used
        import_empty_nt_sequences: True # keep sequences even though the nucleotide sequence might be empty
        import_empty_aa_sequences: False # filter out sequences if they don't have sequence_aa set
        region_type: IMGT_CDR3 # what part of the sequence to import
        column_mapping: # column mapping file: immuneML
          file_column_amino_acids: sequence_aas
          file_column_v_genes: v_genes
          file_column_j_genes: j_genes
          file_column_frequencies: counts
        metadata_column_mapping: # metadata column mapping file: immuneML
          file_column_antigen_specificity: antigen_specificity
        columns_to_load: # which subset of columns to load from the file
          - file_column_amino_acids
          - file_column_v_genes
          - file_column_j_genes
          - file_column_frequencies
          - file_column_antigen_specificity
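As a further illustration, the sketch below shows how the Generic importer might be set up for a ReceptorDataset, where both chains of a receptor share an identifier that is mapped to sequence_identifiers. The dataset name and all file column names (file_column_chain, file_column_pair_id, alternative_column_amino_acids) are hypothetical and need to be replaced with the headers of the actual input file. Whether a chains column is needed to tell the two chains apart is an assumption made for this example.

```yaml
my_generic_receptor_dataset: # hypothetical user-defined dataset name
  format: Generic
  params:
    path: path/to/files/ # placeholder path
    is_repertoire: False # import receptors, not repertoires
    paired: True # True: ReceptorDataset, False: SequenceDataset
    receptor_chains: TRA_TRB # one of TRA_TRB, TRG_TRD, IGH_IGL, IGH_IGK
    separator: "," # required: column separator of the input files
    region_type: IMGT_CDR3 # input holds IMGT junctions; first and last amino acids are removed
    column_mapping: # file column name: immuneML field
      file_column_amino_acids: sequence_aas
      file_column_chain: chains # hypothetical column with the chain of each line
      file_column_pair_id: sequence_identifiers # hypothetical column with the identifier shared by both chains
    column_mapping_synonyms: # tried when a column named in column_mapping is not found
      alternative_column_amino_acids: sequence_aas # hypothetical alternative header
```

The column_mapping_synonyms entry is optional; it is included only to show that it follows the same key-value format as column_mapping.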

IGoR

Imports data generated by IGoR simulations into a Repertoire-, or SequenceDataset. RepertoireDatasets should be used when making predictions per repertoire, such as predicting a disease state. SequenceDatasets should be used when predicting values for unpaired (single-chain) immune receptors, like antigen specificity.

Note that you should run IGoR with the --CDR3 option specified, since this importer reads the generated CDR3 files. Sequences with missing anchors are not imported, meaning only sequences with value ‘1’ in the anchors_found column are imported. Nucleotide sequences are automatically translated to amino acid sequences.

Reference: Quentin Marcou, Thierry Mora, Aleksandra M. Walczak ‘High-throughput immune repertoire analysis with IGoR’. Nature Communications, (2018) doi.org/10.1038/s41467-018-02832-w.

Arguments:

path (str): This is the path to a directory with IGoR files to import. By default path is set to the current working directory.

is_repertoire (bool): If True, this imports a RepertoireDataset. If False, it imports a SequenceDataset. By default, is_repertoire is set to True.

metadata_file (str): Required for RepertoireDatasets. This parameter specifies the path to the metadata file. This is a csv file with columns filename, subject_id and arbitrary other columns which can be used as labels in instructions. Only the IGoR files included under the column ‘filename’ are imported into the RepertoireDataset. For setting SequenceDataset metadata, metadata_file is ignored, see metadata_column_mapping instead.

import_with_stop_codon (bool): Whether sequences with stop codons should be included in the imported sequences. By default, import_with_stop_codon is False.

import_out_of_frame (bool): Whether out of frame sequences (with value ‘0’ in column is_inframe) should be included in the imported sequences. By default, import_out_of_frame is False.

import_illegal_characters (bool): Whether to import sequences that contain illegal characters, i.e., characters that do not appear in the sequence alphabet (amino acids including stop codon ‘*’, or nucleotides). When set to false, filtering is only applied to the sequence type of interest (when running immuneML in amino acid mode, only entries with illegal characters in the amino acid sequence are removed). By default import_illegal_characters is False.

import_empty_nt_sequences (bool): imports sequences which have an empty nucleotide sequence field; can be True or False. By default, import_empty_nt_sequences is set to True.

region_type (str): Which part of the sequence to import. By default, this value is set to IMGT_CDR3. This means the first and last amino acids are removed from the CDR3 sequence, as IGoR uses the IMGT junction. Specifying any other value will result in importing the sequences as they are. Valid values are IMGT_CDR1, IMGT_CDR2, IMGT_CDR3, IMGT_FR1, IMGT_FR2, IMGT_FR3, IMGT_FR4, IMGT_JUNCTION, FULL_SEQUENCE.

column_mapping (dict): A mapping from IGoR column names to immuneML’s internal data representation. For IGoR, this is by default set to:

    nt_CDR3: sequences
    seq_index: sequence_identifiers

A custom column mapping can be specified here if necessary (for example, adding additional data fields if they are present in the IGoR file, or using alternative column names). Valid immuneML fields that can be specified here are [‘sequence_aas’, ‘sequences’, ‘v_genes’, ‘j_genes’, ‘v_subgroups’, ‘j_subgroups’, ‘v_alleles’, ‘j_alleles’, ‘chains’, ‘counts’, ‘frame_types’, ‘sequence_identifiers’, ‘cell_ids’].

column_mapping_synonyms (dict): This is a column mapping that can be used if a column could have alternative names. The formatting is the same as column_mapping. If some columns specified in column_mapping are not found in the file, the columns specified in column_mapping_synonyms are instead attempted to be loaded. For IGoR format, there is no default column_mapping_synonyms.

metadata_column_mapping (dict): Specifies metadata for SequenceDatasets. This should specify a mapping similar to column_mapping where keys are IGoR column names and values are the names that are internally used in immuneML as metadata fields. These metadata fields can be used as prediction labels for SequenceDatasets. For IGoR format, there is no default metadata_column_mapping. For setting RepertoireDataset metadata, metadata_column_mapping is ignored, see metadata_file instead.

separator (str): Column separator, for IGoR this is by default “,”.

YAML specification:

    my_igor_dataset:
      format: IGoR
      params:
        path: path/to/files/
        is_repertoire: True # whether to import a RepertoireDataset (True) or a SequenceDataset (False)
        metadata_file: path/to/metadata.csv # metadata file for RepertoireDataset
        metadata_column_mapping: # metadata column mapping IGoR: immuneML for SequenceDataset
          igor_column_name1: metadata_label1
          igor_column_name2: metadata_label2
        import_with_stop_codon: False # whether to include sequences with stop codon in the dataset
        import_out_of_frame: False # whether to include out of frame sequences in the dataset
        import_illegal_characters: False # remove sequences with illegal characters for the sequence_type being used
        import_empty_nt_sequences: True # keep sequences even though the nucleotide sequence might be empty
        # Optional fields with IGoR-specific defaults, only change when different behavior is required:
        separator: "," # column separator
        region_type: IMGT_CDR3 # what part of the sequence to import
        column_mapping: # column mapping IGoR: immuneML
          nt_CDR3: sequences
          seq_index: sequence_identifiers
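Because most IGoR parameters have defaults, a shorter specification is often sufficient. The following sketch imports a SequenceDataset and additionally keeps out-of-frame sequences and sequences with stop codons, which are filtered out by default. The dataset name and path are placeholders; the separator, region_type and column_mapping are assumed to keep the IGoR defaults listed above.

```yaml
my_igor_sequence_dataset: # hypothetical user-defined dataset name
  format: IGoR
  params:
    path: path/to/files/ # placeholder path to a directory with IGoR CDR3 files
    is_repertoire: False # import a SequenceDataset; metadata_file is ignored in this case
    import_out_of_frame: True # also keep sequences with value 0 in column is_inframe
    import_with_stop_codon: True # also keep sequences containing stop codons
```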

IReceptor

Imports AIRR datasets retrieved through the iReceptor Gateway into a Repertoire-, Sequence- or ReceptorDataset. The differences between this importer and the AIRR importer are:

This importer takes in a list of .zip files, which must contain one or more AIRR tsv files, and for each AIRR file, a corresponding metadata json file must be present.

This importer does not require a metadata csv file for RepertoireDataset import, it is generated automatically from the metadata json files.

RepertoireDatasets should be used when making predictions per repertoire, such as predicting a disease state. SequenceDatasets or ReceptorDatasets should be used when predicting values for unpaired (single-chain) and paired immune receptors respectively, like antigen specificity.

AIRR rearrangement schema can be found here: https://docs.airr-community.org/en/stable/datarep/rearrangements.html

When importing a ReceptorDataset, the AIRR field cell_id is used to determine the chain pairs.

Arguments:

path (str): This is the path to a directory with .zip files retrieved from the iReceptor Gateway. These .zip files should include AIRR files (with .tsv extension) and corresponding metadata.json files with matching names (e.g., for my_dataset.tsv the corresponding metadata file is called my_dataset-metadata.json). The zip files must use the .zip extension.

is_repertoire (bool): If True, this imports a RepertoireDataset. If False, it imports a SequenceDataset or ReceptorDataset. By default, is_repertoire is set to True.

paired (str): Required for Sequence- or ReceptorDatasets. This parameter determines whether to import a SequenceDataset (paired = False) or a ReceptorDataset (paired = True). In a ReceptorDataset, two sequences with chain types specified by receptor_chains are paired together based on the identifier given in the AIRR column named ‘cell_id’.

receptor_chains (str): Required for ReceptorDatasets. Determines which pair of chains to import for each Receptor. Valid values are TRA_TRB, TRG_TRD, IGH_IGL, IGH_IGK. If receptor_chains is not provided, the chain pair is automatically detected (only one chain pair type allowed per repertoire).

import_productive (bool): Whether productive sequences (with value ‘T’ in column productive) should be included in the imported sequences. By default, import_productive is True.

import_with_stop_codon (bool): Whether sequences with stop codons (with value ‘T’ in column stop_codon) should be included in the imported sequences. This only applies if column stop_codon is present. By default, import_with_stop_codon is False.

import_out_of_frame (bool): Whether out of frame sequences (with value ‘F’ in column vj_in_frame) should be included in the imported sequences. This only applies if column vj_in_frame is present. By default, import_out_of_frame is False.

import_illegal_characters (bool): Whether to import sequences that contain illegal characters, i.e., characters that do not appear in the sequence alphabet (amino acids including stop codon ‘*’, or nucleotides). When set to false, filtering is only applied to the sequence type of interest (when running immuneML in amino acid mode, only entries with illegal characters in the amino acid sequence are removed). By default import_illegal_characters is False.

import_empty_nt_sequences (bool): imports sequences which have an empty nucleotide sequence field; can be True or False. By default, import_empty_nt_sequences is set to True.

import_empty_aa_sequences (bool): imports sequences which have an empty amino acid sequence field; can be True or False; for analysis on amino acid sequences, this parameter should be False (import only non-empty amino acid sequences). By default, import_empty_aa_sequences is set to False.

region_type (str): Which part of the sequence to import. By default, this value is set to IMGT_CDR3. This means the first and last amino acids are removed from the CDR3 sequence, as AIRR uses the IMGT junction. Specifying any other value will result in importing the sequences as they are. Valid values are IMGT_CDR1, IMGT_CDR2, IMGT_CDR3, IMGT_FR1, IMGT_FR2, IMGT_FR3, IMGT_FR4, IMGT_JUNCTION, FULL_SEQUENCE.

column_mapping (dict): A mapping from AIRR column names to immuneML’s internal data representation. For AIRR, this is by default set to:

    junction: sequences
    junction_aa: sequence_aas
    v_call: v_alleles
    j_call: j_alleles
    locus: chains
    duplicate_count: counts
    sequence_id: sequence_identifiers

A custom column mapping can be specified here if necessary (for example, adding additional data fields if they are present in the AIRR file, or using alternative column names). Valid immuneML fields that can be specified here are [‘sequence_aas’, ‘sequences’, ‘v_genes’, ‘j_genes’, ‘v_subgroups’, ‘j_subgroups’, ‘v_alleles’, ‘j_alleles’, ‘chains’, ‘counts’, ‘frame_types’, ‘sequence_identifiers’, ‘cell_ids’].

column_mapping_synonyms (dict): This is a column mapping that can be used if a column could have alternative names. The formatting is the same as column_mapping. If some columns specified in column_mapping are not found in the file, the columns specified in column_mapping_synonyms are instead attempted to be loaded. For AIRR format, there is no default column_mapping_synonyms.

metadata_column_mapping (dict): Specifies metadata for Sequence- and ReceptorDatasets. This should specify a mapping similar to column_mapping where keys are AIRR column names and values are the names that are internally used in immuneML as metadata fields. These metadata fields can be used as prediction labels for Sequence- and ReceptorDatasets. For AIRR format, there is no default metadata_column_mapping. When importing a RepertoireDataset, the metadata is automatically extracted from the metadata json files.

separator (str): Column separator, for AIRR this is by default "\t".

YAML specification:

    my_airr_dataset:
      format: IReceptor
      params:
        path: path/to/zipfiles/
        is_repertoire: True # whether to import a RepertoireDataset
        metadata_column_mapping: # metadata column mapping AIRR: immuneML for Sequence- or ReceptorDataset
          airr_column_name1: metadata_label1
          airr_column_name2: metadata_label2
        import_productive: True # whether to include productive sequences in the dataset
        import_with_stop_codon: False # whether to include sequences with stop codon in the dataset
        import_out_of_frame: False # whether to include out of frame sequences in the dataset
        import_illegal_characters: False # remove sequences with illegal characters for the sequence_type being used
        import_empty_nt_sequences: True # keep sequences even if the `sequences` column is empty (provided that other fields are as specified here)
        import_empty_aa_sequences: False # remove all sequences with empty `sequence_aas` column
        # Optional fields with AIRR-specific defaults, only change when different behavior is required:
        separator: "\t" # column separator
        region_type: IMGT_CDR3 # what part of the sequence to import
        column_mapping: # column mapping AIRR: immuneML
          junction: sequences
          junction_aa: sequence_aas
          v_call: v_alleles
          j_call: j_alleles
          locus: chains
          duplicate_count: counts
          sequence_id: sequence_identifiers
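For a RepertoireDataset, the main practical difference from the AIRR importer is that no metadata_file is given. A minimal sketch, assuming all other parameters keep their defaults and using a placeholder dataset name and path, could look like this:

```yaml
my_ireceptor_dataset: # hypothetical user-defined dataset name
  format: IReceptor
  params:
    path: path/to/zipfiles/ # placeholder path to a directory with .zip files from the iReceptor Gateway
    is_repertoire: True # repertoire metadata is generated from the metadata json files in the .zip files
```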

ImmuneML

Imports the dataset from the files previously exported by immuneML. It closely resembles AIRR format but relies on binary representations and is optimized for faster read-in at runtime.

ImmuneMLImport can import any kind of dataset (RepertoireDataset, SequenceDataset, ReceptorDataset).

This format includes:

1. a dataset file in yaml format with iml_dataset extension with parameters:

   name,

   identifier,

   metadata_file (for repertoire datasets),

   metadata_fields (for repertoire datasets),

   repertoire_ids (for repertoire datasets),

   element_ids (for receptor and sequence datasets),

   labels,

2. a csv metadata file (only for repertoire datasets, should be in the same folder as the iml_dataset file),

3. data files for different types of data. For repertoire datasets, data files include one binary numpy file per repertoire with sequences and associated information and one metadata yaml file per repertoire with details such as repertoire identifier, disease status, subject id and other similar available information. For sequence and receptor datasets, sequences or receptors respectively, are stored in batches in binary numpy files.

Arguments:

path (str): The path to the previously created dataset file. This file should have an ‘.iml_dataset’ extension. If the path has not been specified, immuneML attempts to load the dataset from a specified metadata file (only for RepertoireDatasets).

metadata_file (str): An optional metadata file for a RepertoireDataset. If specified, the RepertoireDataset metadata will be updated to the newly specified metadata without otherwise changing the Repertoire objects.

YAML specification:

    my_dataset:
      format: ImmuneML
      params:
        path: path/to/dataset.iml_dataset
        metadata_file: path/to/metadata.csv
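Each dataset specification on this page is placed under the datasets keyword inside definitions. As a sketch of that nesting, using the ImmuneML import with placeholder names and omitting the optional metadata_file, the relevant part of a specification could look like this (the mandatory instructions and output keywords are left out here):

```yaml
definitions:
  datasets:
    my_dataset: # user-defined name, used to refer to this dataset in instructions
      format: ImmuneML
      params:
        path: path/to/dataset.iml_dataset # placeholder path to a previously exported dataset file
```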

ImmunoSEQRearrangement

Imports data from Adaptive Biotechnologies immunoSEQ Analyzer rearrangement-level .tsv files into a Repertoire-, or SequenceDataset. RepertoireDatasets should be used when making predictions per repertoire, such as predicting a disease state. SequenceDatasets should be used when predicting values for unpaired (single-chain) immune receptors, like antigen specificity.

The format of the files imported by this importer is described here: https://www.adaptivebiotech.com/wp-content/uploads/2019/07/MRK-00342_immunoSEQ_TechNote_DataExport_WEB_REV.pdf Alternatively, to import sample-level .tsv files, see ImmunoSEQSample import.

The only difference between these two importers is which columns they load from the .tsv files.

Arguments:

path (str): This is the path to a directory with files to import. By default path is set to the current working directory.

is_repertoire (bool): If True, this imports a RepertoireDataset. If False, it imports a SequenceDataset. By default, is_repertoire is set to True.

metadata_file (str): Required for RepertoireDatasets. This parameter specifies the path to the metadata file. This is a csv file with columns filename, subject_id and arbitrary other columns which can be used as labels in instructions. Only the files included under the column ‘filename’ are imported into the RepertoireDataset. For setting SequenceDataset metadata, metadata_file is ignored, see metadata_column_mapping instead.

import_productive (bool): Whether productive sequences (with value ‘In’ in column frame_type) should be included in the imported sequences. By default, import_productive is True.

import_with_stop_codon (bool): Whether sequences with stop codons (with value ‘Stop’ in column frame_type) should be included in the imported sequences. By default, import_with_stop_codon is False.

import_out_of_frame (bool): Whether out of frame sequences (with value ‘Out’ in column frame_type) should be included in the imported sequences. By default, import_out_of_frame is False.

import_illegal_characters (bool): Whether to import sequences that contain illegal characters, i.e., characters that do not appear in the sequence alphabet (amino acids including stop codon ‘*’, or nucleotides). When set to false, filtering is only applied to the sequence type of interest (when running immuneML in amino acid mode, only entries with illegal characters in the amino acid sequence are removed). By default import_illegal_characters is False.

import_empty_nt_sequences (bool): imports sequences which have an empty nucleotide sequence field; can be True or False. By default, import_empty_nt_sequences is set to True.

import_empty_aa_sequences (bool): imports sequences which have an empty amino acid sequence field; can be True or False; for analysis on amino acid sequences, this parameter should be False (import only non-empty amino acid sequences). By default, import_empty_aa_sequences is set to False.

region_type (str): Which part of the sequence to import. By default, this value is set to IMGT_CDR3. This means the first and last amino acids are removed from the CDR3 sequence, as immunoSEQ files use the IMGT junction. Specifying any other value will result in importing the sequences as they are. Valid values are IMGT_CDR1, IMGT_CDR2, IMGT_CDR3, IMGT_FR1, IMGT_FR2, IMGT_FR3, IMGT_FR4, IMGT_JUNCTION, FULL_SEQUENCE.

column_mapping (dict): A mapping from immunoSEQ column names to immuneML’s internal data representation. For immunoSEQ rearrangement-level files, this is by default set to:

    rearrangement: sequences
    amino_acid: sequence_aas
    v_gene: v_genes
    j_gene: j_genes
    frame_type: frame_types
    v_family: v_subgroups
    j_family: j_subgroups
    v_allele: v_alleles
    j_allele: j_alleles
    templates: counts
    locus: chains

A custom column mapping can be specified here if necessary (for example, adding additional data fields if they are present in the file, or using alternative column names). Valid immuneML fields that can be specified here are [‘sequence_aas’, ‘sequences’, ‘v_genes’, ‘j_genes’, ‘v_subgroups’, ‘j_subgroups’, ‘v_alleles’, ‘j_alleles’, ‘chains’, ‘counts’, ‘frame_types’, ‘sequence_identifiers’, ‘cell_ids’].

column_mapping_synonyms (dict): This is a column mapping that can be used if a column could have alternative names. The formatting is the same as column_mapping. If some columns specified in column_mapping are not found in the file, the columns specified in column_mapping_synonyms are instead attempted to be loaded. For immunoSEQ rearrangement-level files, this is by default set to:

    v_resolved: v_alleles
    j_resolved: j_alleles

columns_to_load (list): Specifies which subset of columns must be loaded from the file. By default, this is: [rearrangement, v_family, v_gene, v_allele, j_family, j_gene, j_allele, amino_acid, templates, frame_type, locus]

metadata_column_mapping (dict): Specifies metadata for SequenceDatasets. This should specify a mapping similar to column_mapping where keys are immunoSEQ column names and values are the names that are internally used in immuneML as metadata fields. These metadata fields can be used as prediction labels for SequenceDatasets. For immunoSEQ rearrangement .tsv files, there is no default metadata_column_mapping. For setting RepertoireDataset metadata, metadata_column_mapping is ignored, see metadata_file instead.

separator (str): Column separator, for ImmunoSEQ files this is by default “\t”.

YAML specification:

my_immunoseq_dataset:
    format: ImmunoSEQRearrangement
    params:
        path: path/to/files/
        is_repertoire: True # whether to import a RepertoireDataset (True) or a SequenceDataset (False)
        metadata_file: path/to/metadata.csv # metadata file for RepertoireDataset
        metadata_column_mapping: # metadata column mapping ImmunoSEQ: immuneML for SequenceDataset
            immunoseq_column_name1: metadata_label1
            immunoseq_column_name2: metadata_label2
        import_productive: True # whether to include productive sequences in the dataset
        import_with_stop_codon: False # whether to include sequences with stop codon in the dataset
        import_out_of_frame: False # whether to include out of frame sequences in the dataset
        import_illegal_characters: False # remove sequences with illegal characters for the sequence_type being used
        import_empty_nt_sequences: True # keep sequences even though the nucleotide sequence might be empty
        import_empty_aa_sequences: False # filter out sequences if they don't have sequence_aa set
        # Optional fields with ImmunoSEQ rearrangement-specific defaults, only change when different behavior is required:
        separator: "\t" # column separator
        columns_to_load: # subset of columns to load
        - rearrangement
        - v_family
        - v_gene
        - v_allele
        - j_family
        - j_gene
        - j_allele
        - amino_acid
        - templates
        - frame_type
        - locus
        region_type: IMGT_CDR3 # what part of the sequence to import
        column_mapping: # column mapping immunoSEQ: immuneML
            rearrangement: sequences
            amino_acid: sequence_aas
            v_gene: v_genes
            j_gene: j_genes
            frame_type: frame_types
            v_family: v_subgroups
            j_family: j_subgroups
            v_allele: v_alleles
            j_allele: j_alleles
            templates: counts
            locus: chains
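To make the effect of the import_productive, import_with_stop_codon, import_out_of_frame and region_type parameters more concrete, here is a minimal Python sketch. It is not the immuneML implementation: the rows, the helper names keep_row and junction_to_cdr3, and the choice to drop rows with an unrecognised frame_type are assumptions made for illustration only.

```python
# Hypothetical rows keyed by immunoSEQ rearrangement-level column names.
rows = [
    {"amino_acid": "CASSLGQAYEQYF", "frame_type": "In"},
    {"amino_acid": "CASS*GQAYEQYF", "frame_type": "Stop"},
    {"amino_acid": "", "frame_type": "Out"},
]


def keep_row(row, import_productive=True, import_with_stop_codon=False,
             import_out_of_frame=False):
    """Return True if the row's frame_type is enabled by the import flags."""
    allowed = {
        "In": import_productive,
        "Stop": import_with_stop_codon,
        "Out": import_out_of_frame,
    }
    # Assumption: rows with any other frame_type value are dropped.
    return allowed.get(row["frame_type"], False)


def junction_to_cdr3(junction):
    """Drop the first and last amino acid of an IMGT junction (region_type IMGT_CDR3)."""
    return junction[1:-1]


# With the default flags, only the productive ('In') row is kept.
kept = [row for row in rows if keep_row(row)]
cdr3s = [junction_to_cdr3(row["amino_acid"]) for row in kept]
print(cdr3s)  # ['ASSLGQAYEQY']
```

Passing import_with_stop_codon=True to keep_row would additionally keep the second row.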

ImmunoSEQSample

Imports data from Adaptive Biotechnologies immunoSEQ Analyzer sample-level .tsv files into a RepertoireDataset or a SequenceDataset. RepertoireDatasets should be used when making predictions per repertoire, such as predicting a disease state. SequenceDatasets should be used when predicting values for unpaired (single-chain) immune receptors, such as antigen specificity.

The format of the files imported by this importer is described in section 3.4.13 of https://clients.adaptivebiotech.com/assets/downloads/immunoSEQ_AnalyzerManual.pdf

Alternatively, to import rearrangement-level .tsv files, see ImmunoSEQRearrangement import. The only difference between these two importers is which columns they load from the .tsv files.

Arguments:

path (str): This is the path to a directory with files to import. By default path is set to the current working directory.

is_repertoire (bool): If True, this imports a RepertoireDataset. If False, it imports a SequenceDataset. By default, is_repertoire is set to True.

metadata_file (str): Required for RepertoireDatasets. This parameter specifies the path to the metadata file. This is a csv file with columns filename, subject_id and arbitrary other columns which can be used as labels in instructions. Only the files included under the column ‘filename’ are imported into the RepertoireDataset. For setting SequenceDataset metadata, metadata_file is ignored, see metadata_column_mapping instead.

import_productive (bool): Whether productive sequences (with value ‘In’ in column frame_type) should be included in the imported sequences. By default, import_productive is True.

import_with_stop_codon (bool): Whether sequences with stop codons (with value ‘Stop’ in column frame_type) should be included in the imported sequences. By default, import_with_stop_codon is False.

import_out_of_frame (bool): Whether out of frame sequences (with value ‘Out’ in column frame_type) should be included in the imported sequences. By default, import_out_of_frame is False.

import_illegal_characters (bool): Whether to import sequences that contain illegal characters, i.e., characters that do not appear in the sequence alphabet (amino acids including stop codon ‘*’, or nucleotides). When set to false, filtering is only applied to the sequence type of interest (when running immuneML in amino acid mode, only entries with illegal characters in the amino acid sequence are removed). By default import_illegal_characters is False.

import_empty_nt_sequences (bool): imports sequences which have an empty nucleotide sequence field; can be True or False. By default, import_empty_nt_sequences is set to True.

import_empty_aa_sequences (bool): imports sequences which have an empty amino acid sequence field; can be True or False; for analysis on amino acid sequences, this parameter should be False (import only non-empty amino acid sequences). By default, import_empty_aa_sequences is set to False.

region_type (str): Which part of the sequence to import. By default, this value is set to IMGT_CDR3. This means the first and last amino acids are removed from the CDR3 sequence, as immunoSEQ files use the IMGT junction. Specifying any other value will result in importing the sequences as they are. Valid values are IMGT_CDR1, IMGT_CDR2, IMGT_CDR3, IMGT_FR1, IMGT_FR2, IMGT_FR3, IMGT_FR4, IMGT_JUNCTION, FULL_SEQUENCE.

column_mapping (dict): A mapping from immunoSEQ column names to immuneML’s internal data representation. For immunoSEQ sample-level files, this is by default set to:

    nucleotide: sequences
    aminoAcid: sequence_aas
    vGeneName: v_genes
    jGeneName: j_genes
    sequenceStatus: frame_types
    vFamilyName: v_subgroups
    jFamilyName: j_subgroups
    vGeneAllele: v_alleles
    jGeneAllele: j_alleles
    count (templates/reads): counts

A custom column mapping can be specified here if necessary (for example, adding additional data fields if they are present in the file, or using alternative column names). Valid immuneML fields that can be specified here are [‘sequence_aas’, ‘sequences’, ‘v_genes’, ‘j_genes’, ‘v_subgroups’, ‘j_subgroups’, ‘v_alleles’, ‘j_alleles’, ‘chains’, ‘counts’, ‘frame_types’, ‘sequence_identifiers’, ‘cell_ids’].

column_mapping_synonyms (dict): This is a column mapping that can be used if a column could have alternative names. The formatting is the same as column_mapping. If some columns specified in column_mapping are not found in the file, the columns specified in column_mapping_synonyms are instead attempted to be loaded. For immunoSEQ sample .tsv files, there is no default column_mapping_synonyms.

columns_to_load (list): Specifies which subset of columns must be loaded from the file. By default, this is: [nucleotide, aminoAcid, count (templates/reads), vFamilyName, vGeneName, vGeneAllele, jFamilyName, jGeneName, jGeneAllele, sequenceStatus]; these are the columns from the original file that will be imported

metadata_column_mapping (dict): Specifies metadata for SequenceDatasets. This should specify a mapping similar to column_mapping where keys are immunoSEQ column names and values are the names that are internally used in immuneML as metadata fields. These metadata fields can be used as prediction labels for SequenceDatasets. For immunoSEQ sample .tsv files, there is no default metadata_column_mapping. For setting RepertoireDataset metadata, metadata_column_mapping is ignored, see metadata_file instead.

separator (str): Column separator, for ImmunoSEQ files this is by default “\t”.

YAML specification:

my_immunoseq_dataset:
    format: ImmunoSEQSample
    params:
        path: path/to/files/
        is_repertoire: True # whether to import a RepertoireDataset (True) or a SequenceDataset (False)
        metadata_file: path/to/metadata.csv # metadata file for RepertoireDataset
        metadata_column_mapping: # metadata column mapping ImmunoSEQ: immuneML for SequenceDataset
            immunoseq_column_name1: metadata_label1
            immunoseq_column_name2: metadata_label2
        import_productive: True # whether to include productive sequences in the dataset
        import_with_stop_codon: False # whether to include sequences with stop codon in the dataset
        import_out_of_frame: False # whether to include out of frame sequences in the dataset
        import_illegal_characters: False # remove sequences with illegal characters for the sequence_type being used
        import_empty_nt_sequences: True # keep sequences even though the nucleotide sequence might be empty
        import_empty_aa_sequences: False # filter out sequences if they don't have sequence_aa set
        # Optional fields with ImmunoSEQ sample-specific defaults, only change when different behavior is required:
        separator: "\t" # column separator
        columns_to_load: # subset of columns to load
        - nucleotide
        - aminoAcid
        - count (templates/reads)
        - vFamilyName
        - vGeneName
        - vGeneAllele
        - jFamilyName
        - jGeneName
        - jGeneAllele
        - sequenceStatus
        region_type: IMGT_CDR3 # what part of the sequence to import
        column_mapping: # column mapping immunoSEQ: immuneML
            nucleotide: sequences
            aminoAcid: sequence_aas
            vGeneName: v_genes
            jGeneName: j_genes
            sequenceStatus: frame_types
            vFamilyName: v_subgroups
            jFamilyName: j_subgroups
            vGeneAllele: v_alleles
            jGeneAllele: j_alleles
            count (templates/reads): counts
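The interplay between column_mapping and column_mapping_synonyms can be sketched in Python as follows. This is one possible reading of the descriptions above, not immuneML's actual code: the function rename_columns, the example rows, and the synonym entry templates: counts are hypothetical (sample-level files have no default synonyms), and dropping unmapped columns is an assumption.

```python
# Default sample-level column mapping, copied from the documentation above.
column_mapping = {
    "nucleotide": "sequences",
    "aminoAcid": "sequence_aas",
    "vGeneName": "v_genes",
    "jGeneName": "j_genes",
    "sequenceStatus": "frame_types",
    "vFamilyName": "v_subgroups",
    "jFamilyName": "j_subgroups",
    "vGeneAllele": "v_alleles",
    "jGeneAllele": "j_alleles",
    "count (templates/reads)": "counts",
}


def rename_columns(row, mapping, synonyms=None):
    """Rename the keys of one parsed row to immuneML field names.

    Columns listed in `mapping` are used when present in the row. Synonym
    columns are consulted only for fields that are still missing afterwards.
    Assumption: columns that appear in neither mapping are dropped.
    """
    renamed = {mapping[col]: value for col, value in row.items() if col in mapping}
    for col, field in (synonyms or {}).items():
        if field not in renamed and col in row:
            renamed[field] = row[col]
    return renamed


# Hypothetical row using the default sample-level column names.
row = {
    "aminoAcid": "CASSLGQAYEQYF",
    "vGeneName": "TCRBV05-01",
    "count (templates/reads)": "12",
    "sample_name": "s1",
}
renamed = rename_columns(row, column_mapping)

# Hypothetical file that names its count column 'templates' instead.
with_synonym = rename_columns(
    {"aminoAcid": "CASSF", "templates": "3"},
    column_mapping,
    synonyms={"templates": "counts"},
)
```

Here renamed contains only sequence_aas, v_genes and counts, and with_synonym obtains its counts value through the synonym because the default count column is absent.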

MiXCR

Imports data in MiXCR format into a RepertoireDataset or a SequenceDataset. RepertoireDatasets should be used when making predictions per repertoire, such as predicting a disease state. SequenceDatasets should be used when predicting values for unpaired (single-chain) immune receptors, such as antigen specificity.

Arguments:

path (str): This is the path to a directory with MiXCR files to import. By default path is set to the current working directory.

is_repertoire (bool): If True, this imports a RepertoireDataset. If False, it imports a SequenceDataset. By default, is_repertoire is set to True.

metadata_file (str): Required for RepertoireDatasets. This parameter specifies the path to the metadata file. This is a csv file with columns filename, subject_id and arbitrary other columns which can be used as labels in instructions. Only the MiXCR files included under the column ‘filename’ are imported into the RepertoireDataset. For setting SequenceDataset metadata, metadata_file is ignored, see metadata_column_mapping instead.

import_illegal_characters (bool): Whether to import sequences that contain illegal characters, i.e., characters that do not appear in the sequence alphabet (amino acids including stop codon ‘*’, or nucleotides). When set to false, filtering is only applied to the sequence type of interest (when running immuneML in amino acid mode, only entries with illegal characters in the amino acid sequence, such as ‘_’, are removed). By default import_illegal_characters is False.

import_empty_nt_sequences (bool): imports sequences which have an empty nucleotide sequence field; can be True or False. By default, import_empty_nt_sequences is set to True.

import_empty_aa_sequences (bool): imports sequences which have an empty amino acid sequence field; can be True or False; for analysis on amino acid sequences, this parameter should be False (import only non-empty amino acid sequences). By default, import_empty_aa_sequences is set to False.

region_type (str): Which part of the sequence to import. By default, this value is set to IMGT_CDR3. This means the first and last amino acids are removed from the CDR3 sequence, as MiXCR uses IMGT junction as CDR3. Alternatively to importing the CDR3 sequence, other region types can be specified here as well. Valid values are IMGT_CDR3, IMGT_CDR1, IMGT_CDR2, IMGT_FR1, IMGT_FR2, IMGT_FR3, IMGT_FR4.

column_mapping (dict): A mapping from MiXCR column names to immuneML’s internal data representation. For MiXCR, this is by default set to:

    cloneCount: counts
    allVHitsWithScore: v_alleles
    allJHitsWithScore: j_alleles

The columns that specify the sequences to import are handled by the region_type parameter. A custom column mapping can be specified here if necessary (for example, adding additional data fields if they are present in the MiXCR file, or using alternative column names). Valid immuneML fields that can be specified here are [‘sequence_aas’, ‘sequences’, ‘v_genes’, ‘j_genes’, ‘v_subgroups’, ‘j_subgroups’, ‘v_alleles’, ‘j_alleles’, ‘chains’, ‘counts’, ‘frame_types’, ‘sequence_identifiers’, ‘cell_ids’].

column_mapping_synonyms (dict): This is a column mapping that can be used if a column could have alternative names. The formatting is the same as column_mapping. If some columns specified in column_mapping are not found in the file, the columns specified in column_mapping_synonyms are instead attempted to be loaded. For MiXCR format, there is no default column_mapping_synonyms.

columns_to_load (list): Specifies which subset of columns must be loaded from the MiXCR file. By default, this is: [cloneCount, allVHitsWithScore, allJHitsWithScore, aaSeqCDR3, nSeqCDR3]

metadata_column_mapping (dict): Specifies metadata for SequenceDatasets. This should specify a mapping similar to column_mapping where keys are MiXCR column names and values are the names that are internally used in immuneML as metadata fields. These metadata fields can be used as prediction labels for SequenceDatasets. For MiXCR format, there is no default metadata_column_mapping. For setting RepertoireDataset metadata, metadata_column_mapping is ignored, see metadata_file instead.

separator (str): Column separator, for MiXCR this is by default “\t”.

YAML specification:

my_mixcr_dataset:
    format: MiXCR
    params:
        path: path/to/files/
        is_repertoire: True # whether to import a RepertoireDataset (True) or a SequenceDataset (False)
        metadata_file: path/to/metadata.csv # metadata file for RepertoireDataset
        metadata_column_mapping: # metadata column mapping MiXCR: immuneML for SequenceDataset
            mixcrColumnName1: metadata_label1
            mixcrColumnName2: metadata_label2
        region_type: IMGT_CDR3 # what part of the sequence to import
        import_illegal_characters: False # remove sequences with illegal characters for the sequence_type being used
        import_empty_nt_sequences: True # keep sequences even though the nucleotide sequence might be empty
        import_empty_aa_sequences: False # filter out sequences if they don't have sequence_aa set
        # Optional fields with MiXCR-specific defaults, only change when different behavior is required:
        separator: "\t" # column separator
        columns_to_load: # subset of columns to load, sequence columns are handled by region_type parameter
        - cloneCount
        - allVHitsWithScore
        - allJHitsWithScore
        - aaSeqCDR3
        - nSeqCDR3
        column_mapping: # column mapping MiXCR: immuneML
            cloneCount: counts
            allVHitsWithScore: v_genes
            allJHitsWithScore: j_genes
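The behaviour described for import_illegal_characters (filtering only on the sequence type in use) might look roughly like the Python sketch below. The alphabets, the function names, the sequence_type values and the example rows are assumptions for illustration; immuneML's own alphabet definitions may differ, for instance in how letter case is handled.

```python
# Assumed alphabets: 20 amino acids plus the stop codon '*', and four nucleotides.
AMINO_ACID_ALPHABET = set("ACDEFGHIKLMNPQRSTVWY*")
NUCLEOTIDE_ALPHABET = set("ACGT")


def has_illegal_characters(sequence, alphabet):
    """Return True if any character of the sequence is outside the alphabet."""
    return any(char not in alphabet for char in sequence)


def filter_rows(rows, sequence_type="amino_acid", import_illegal_characters=False):
    """Drop rows whose sequence of the given type contains illegal characters."""
    if import_illegal_characters:
        return list(rows)
    if sequence_type == "amino_acid":
        field, alphabet = "sequence_aas", AMINO_ACID_ALPHABET
    else:
        field, alphabet = "sequences", NUCLEOTIDE_ALPHABET
    return [row for row in rows if not has_illegal_characters(row[field], alphabet)]


# Hypothetical rows: the second has '_' in the amino acid sequence,
# the third has 'N' in the nucleotide sequence only.
rows = [
    {"sequence_aas": "CASSLGF", "sequences": "TGTGCCAGCAGCTTAGGTTTC"},
    {"sequence_aas": "CAS_LGF", "sequences": "TGTGCCAGCTTAGGTTTC"},
    {"sequence_aas": "CASSF", "sequences": "TGTGCNAGCAGCTTC"},
]

aa_kept = filter_rows(rows, sequence_type="amino_acid")
nt_kept = filter_rows(rows, sequence_type="nucleotide")
```

In amino acid mode the third row survives even though its nucleotide sequence contains 'N', which mirrors the statement that filtering is applied only to the sequence type of interest.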

OLGA

Imports data generated by OLGA simulations into a RepertoireDataset or a SequenceDataset. Assumes that the columns in each file correspond to: nucleotide sequences, amino acid sequences, v genes, j genes.

Reference: Sethna, Zachary et al. ‘OLGA: fast computation of generation probabilities of B- and T-cell receptor amino acid sequences and motifs’. Bioinformatics, (2019) doi.org/10.1093/bioinformatics/btz035.

Arguments:

path (str): This is the path to a directory with OLGA files to import. By default path is set to the current working directory.

is_repertoire (bool): If True, this imports a RepertoireDataset. If False, it imports a SequenceDataset. By default, is_repertoire is set to True.

metadata_file (str): Required for RepertoireDatasets. This parameter specifies the path to the metadata file. This is a csv file with columns filename, subject_id and arbitrary other columns which can be used as labels in instructions. Only the OLGA files included under the column ‘filename’ are imported into the RepertoireDataset. SequenceDataset metadata is currently not supported.

import_illegal_characters (bool): Whether to import sequences that contain illegal characters, i.e., characters that do not appear in the sequence alphabet (amino acids including stop codon ‘*’, or nucleotides). When set to false, filtering is only applied to the sequence type of interest (when running immuneML in amino acid mode, only entries with illegal characters in the amino acid sequence are removed). By default import_illegal_characters is False.

import_empty_nt_sequences (bool): imports sequences which have an empty nucleotide sequence field; can be True or False. By default, import_empty_nt_sequences is set to True.

import_empty_aa_sequences (bool): imports sequences which have an empty amino acid sequence field; can be True or False; for analysis on amino acid sequences, this parameter should be False (import only non-empty amino acid sequences). By default, import_empty_aa_sequences is set to False.

region_type (str): Which part of the sequence to import. By default, this value is set to IMGT_CDR3. This means the first and last amino acids are removed from the CDR3 sequence, as OLGA uses the IMGT junction. Specifying any other value will result in importing the sequences as they are. Valid values are IMGT_CDR1, IMGT_CDR2, IMGT_CDR3, IMGT_FR1, IMGT_FR2, IMGT_FR3, IMGT_FR4, IMGT_JUNCTION, FULL_SEQUENCE.

separator (str): Column separator, for OLGA this is by default “\t”.

YAML specification:

my_olga_dataset:
    format: OLGA
    params:
        path: path/to/files/
        is_repertoire: True # whether to import a RepertoireDataset (True) or a SequenceDataset (False)
        metadata_file: path/to/metadata.csv # metadata file for RepertoireDataset
        import_illegal_characters: False # remove sequences with illegal characters for the sequence_type being used
        import_empty_nt_sequences: True # keep sequences even though the nucleotide sequence might be empty
        import_empty_aa_sequences: False # filter out sequences if they don't have sequence_aa set
        # Optional fields with OLGA-specific defaults, only change when different behavior is required:
        separator: "\t" # column separator
        region_type: IMGT_CDR3 # what part of the sequence to import
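As a rough illustration of the column assumption stated for OLGA files, the Python sketch below reads tab-separated lines positionally and assigns the four columns to immuneML field names. The file content is made up, and the sketch assumes the file has no header row and lists the columns in the order given above; it is not how immuneML itself reads the files.

```python
import csv
import io

# Hypothetical OLGA output: tab-separated, assumed to have no header row.
olga_text = (
    "TGTGCCAGCAGCTTAGGTTTC\tCASSLGF\tTRBV5-1\tTRBJ1-1\n"
    "TGTGCCAGCAGTTTC\tCASSF\tTRBV7-9\tTRBJ2-7\n"
)

# Column order as listed in the OLGA description, using immuneML field names.
FIELDS = ["sequences", "sequence_aas", "v_genes", "j_genes"]

reader = csv.reader(io.StringIO(olga_text), delimiter="\t")
records = [dict(zip(FIELDS, line)) for line in reader]

for record in records:
    print(record["sequence_aas"], record["v_genes"], record["j_genes"])
```

To read from disk instead, the io.StringIO object would be replaced by an opened file handle.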

RandomReceptorDataset

Returns a ReceptorDataset consisting of randomly generated sequences, which can be used for benchmarking purposes. The sequences consist of uniformly chosen amino acids or nucleotides.

Arguments:

receptor_count (int): The number of receptors the ReceptorDataset should contain.

chain_1_length_probabilities (dict): A mapping where the keys correspond to different sequence lengths for chain 1, and the values are the probabilities for choosing each sequence length. For example, to create a random ReceptorDataset where 40% of the sequences for chain 1 would be of length 10, and 60% of the sequences would have length 12, this mapping would need to be specified:

    10: 0.4
    12: 0.6

chain_2_length_probabilities (dict): Same as chain_1_length_probabilities, but for chain 2.

labels (dict): A mapping that specifies randomly chosen labels to be assigned to the receptors. One or multiple labels can be specified here. The keys of this mapping are the labels, and the values consist of another mapping between label classes and their probabilities. For example, to create a random ReceptorDataset with the label cmv_epitope where 70% of the receptors have class binding and the remaining 30% have class not_binding, the following mapping should be specified:

    cmv_epitope:
        binding: 0.7
        not_binding: 0.3

YAML specification:

my_random_dataset:
    format: RandomReceptorDataset
    params:
        receptor_count: 100 # number of random receptors to generate
        chain_1_length_probabilities:
            14: 0.8 # 80% of all generated sequences for all receptors (for chain 1) will have length 14
            15: 0.2 # 20% of all generated sequences across all receptors (for chain 1) will have length 15
        chain_2_length_probabilities:
            14: 0.8 # 80% of all generated sequences for all receptors (for chain 2) will have length 14
            15: 0.2 # 20% of all generated sequences across all receptors (for chain 2) will have length 15
        labels:
            epitope1: # label name
                True: 0.5 # 50% of the receptors will have class True
                False: 0.5 # 50% of the receptors will have class False
            epitope2: # next label with classes that will be assigned to receptors independently of the previous label or other parameters
                1: 0.3 # 30% of the generated receptors will have class 1
                0: 0.7 # 70% of the generated receptors will have class 0
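The way the length and label probability mappings could drive the generation of random receptors is sketched below in Python. This is an illustrative approximation, not immuneML's generator: the function names, the plain-dict receptor representation, the use of amino acids only, and the seed parameter are all assumptions.

```python
import random

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def draw(probabilities, rng):
    """Draw one key of a {value: probability} mapping."""
    values = list(probabilities)
    weights = list(probabilities.values())
    return rng.choices(values, weights=weights, k=1)[0]


def random_sequence(length_probabilities, rng):
    """Draw a length, then choose each amino acid uniformly at random."""
    length = draw(length_probabilities, rng)
    return "".join(rng.choice(AMINO_ACIDS) for _ in range(length))


def random_receptors(receptor_count, chain_1_length_probabilities,
                     chain_2_length_probabilities, labels, seed=0):
    """Generate receptors as plain dicts; every label is drawn independently."""
    rng = random.Random(seed)
    receptors = []
    for _ in range(receptor_count):
        receptors.append({
            "chain_1": random_sequence(chain_1_length_probabilities, rng),
            "chain_2": random_sequence(chain_2_length_probabilities, rng),
            "labels": {name: draw(classes, rng) for name, classes in labels.items()},
        })
    return receptors


# Same parameter values as in the YAML specification above.
receptors = random_receptors(
    receptor_count=100,
    chain_1_length_probabilities={14: 0.8, 15: 0.2},
    chain_2_length_probabilities={14: 0.8, 15: 0.2},
    labels={"epitope1": {True: 0.5, False: 0.5}, "epitope2": {1: 0.3, 0: 0.7}},
)
```

Because each value is sampled, the observed proportions in a small dataset only approximate the specified probabilities.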

RandomRepertoireDataset

Returns a RepertoireDataset consisting of randomly generated sequences, which can be used for benchmarking purposes. The sequences consist of uniformly chosen amino acids or nucleotides.

Arguments:

repertoire_count (int): The number of repertoires the RepertoireDataset should contain.

sequence_count_probabilities (dict): A mapping where the keys are the number of sequences per repertoire, and the values are the probabilities that any of the repertoires would have that number of sequences. For example, to create a random RepertoireDataset where 40% of the repertoires would have 1000 sequences, and the other 60% would have 1100 sequences, this mapping would need to be specified:

    1000: 0.4
    1100: 0.6

sequence_length_probabilities (dict): A mapping where the keys correspond to different sequence lengths, and the values are the probabilities for choosing each sequence length. For example, to create a random RepertoireDataset where 40% of the sequences would be of length 10, and 60% of the sequences would have length 12, this mapping would need to be specified:

    10: 0.4
    12: 0.6

labels (dict): A mapping that specifies randomly chosen labels to be assigned to the Repertoires. One or multiple labels can be specified here. The keys of this mapping are the labels, and the values consist of another mapping between label classes and their probabilities. For example, to create a random RepertoireDataset with the label CMV where 70% of the Repertoires have class cmv_positive and the remaining 30% have class cmv_negative, the following mapping should be specified:

    CMV:
        cmv_positive: 0.7
        cmv_negative: 0.3

YAML specification:

my_random_dataset:
    format: RandomRepertoireDataset
    params:
        repertoire_count: 100 # number of random repertoires to generate
        sequence_count_probabilities:
            10: 0.5 # probability that any of the repertoires would have 10 receptor sequences
            20: 0.5
        sequence_length_probabilities:
            10: 0.5 # probability that any of the receptor sequences would be 10 amino acids in length
            12: 0.5
        labels: # randomly assigned labels (only useful for simple benchmarking)
            cmv:
                True: 0.5 # probability of value True for label cmv to be assigned to any repertoire
                False: 0.5

RandomSequenceDataset

Returns a SequenceDataset consisting of randomly generated sequences, which can be used for benchmarking purposes. The sequences consist of uniformly chosen amino acids or nucleotides.

Arguments:

sequence_count (int): The number of sequences the SequenceDataset should contain.

length_probabilities (dict): A mapping where the keys correspond to different sequence lengths and the values are the probabilities for choosing each sequence length. For example, to create a random SequenceDataset where 40% of the sequences would be of length 10, and 60% of the sequences would have length 12, this mapping would need to be specified:

    10: 0.4
    12: 0.6

labels (dict): A mapping that specifies randomly chosen labels to be assigned to the sequences. One or multiple labels can be specified here. The keys of this mapping are the labels, and the values consist of another mapping between label classes and their probabilities. For example, to create a random SequenceDataset with the label cmv_epitope where 70% of the sequences have class binding and the remaining 30% have class not_binding, the following mapping should be specified:

    cmv_epitope:
        binding: 0.7
        not_binding: 0.3

YAML specification:

my_random_dataset:
    format: RandomSequenceDataset
    params:
        sequence_count: 100 # number of random sequences to generate
        length_probabilities:
            14: 0.8 # 80% of all generated sequences for all sequences will have length 14
            15: 0.2 # 20% of all generated sequences across all sequences will have length 15
        labels:
            epitope1: # label name
                True: 0.5 # 50% of the sequences will have class True
                False: 0.5 # 50% of the sequences will have class False
            epitope2: # next label with classes that will be assigned to sequences independently of the previous label or other parameters
                1: 0.3 # 30% of the generated sequences will have class 1
                0: 0.7 # 70% of the generated sequences will have class 0

SingleLineReceptor

Imports data from a tabular file (where each line contains a pair of immune receptor sequences) into a ReceptorDataset. If you instead want to import a ReceptorDataset from a tabular file that contains one receptor sequence per line, see Generic import.

Arguments:

path (str): Required parameter. This is the path to a directory with files to import.

receptor_chains (str): Required parameter. Determines which pair of chains to import for each Receptor. Valid values for receptor_chains are: TRA_TRB, TRG_TRD, IGH_IGL, IGH_IGK.

import_empty_nt_sequences (bool): imports sequences which have an empty nucleotide sequence field; can be True or False. By default, import_empty_nt_sequences is set to True.

import_empty_aa_sequences (bool): imports sequences which have an empty amino acid sequence field; can be True or False; for analysis on amino acid sequences, this parameter should be False (import only non-empty amino acid sequences). By default, import_empty_aa_sequences is set to False.

region_type (str): Which part of the sequence to import. When IMGT_CDR3 is specified, immuneML assumes the IMGT junction (including leading C and trailing Y/F amino acids) is used in the input file, and the first and last amino acids will be removed from the sequences to retrieve the IMGT CDR3 sequence. Specifying any other value will result in importing the sequences as they are. Valid values are IMGT_CDR1, IMGT_CDR2, IMGT_CDR3, IMGT_FR1, IMGT_FR2, IMGT_FR3, IMGT_FR4, IMGT_JUNCTION, FULL_SEQUENCE.

column_mapping (dict): A mapping where the keys are the column names in the input file, and the values must be mapped to the following fields: <chain>_amino_acid_sequence, <chain>_nucleotide_sequence, <chain>_v_gene, <chain>_j_gene, identifier, epitope. The possible names that can be filled in for <chain> are: ALPHA, BETA, GAMMA, DELTA, HEAVY, LIGHT, KAPPA. Any column name other than the sequence, v/j genes and identifier will be set as a metadata field of the Receptors, and can subsequently be used as a label in immuneML instructions. For TCR alpha-beta receptor import, a column mapping could for example look like this:

    cdr3_a_aa: alpha_amino_acid_sequence
    cdr3_b_aa: beta_amino_acid_sequence
    cdr3_a_nucseq: alpha_nucleotide_sequence
    cdr3_b_nucseq: beta_nucleotide_sequence
    v_a_gene: alpha_v_gene
    v_b_gene: beta_v_gene
    j_a_gene: alpha_j_gene
    j_b_gene: beta_j_gene
    clone_id: identifier
    epitope: epitope # metadata field

column_mapping_synonyms (dict): This is a column mapping that can be used if a column could have alternative names. The formatting is the same as column_mapping. If some columns specified in column_mapping are not found in the file, the columns specified in column_mapping_synonyms are instead attempted to be loaded.

columns_to_load (list): Optional; specifies which columns to load from the input file. This may be useful if the input files contain many unused columns. If no value is specified, all columns are loaded.

separator (str): Required parameter. Column separator, for example “\t” or “,”.

organism (str): The organism that the receptors came from. This will be set as a parameter in the ReceptorDataset object.

YAML specification:

my_receptor_dataset:
    format: SingleLineReceptor
    params:
        path: path/to/files/
        receptor_chains: TRA_TRB # what chain pair to import
        separator: "\t" # column separator
        import_empty_nt_sequences: True # keep sequences even though the nucleotide sequence might be empty
        import_empty_aa_sequences: False # filter out sequences if they don't have sequence_aa set
        region_type: IMGT_CDR3 # what part of the sequence to import
        columns_to_load: # which subset of columns to load from the file
        - subject
        - epitope
        - count
        - v_a_gene
        - j_a_gene
        - cdr3_a_aa
        - v_b_gene
        - j_b_gene
        - cdr3_b_aa
        - clone_id
        column_mapping: # column mapping file: immuneML
            cdr3_a_aa: alpha_amino_acid_sequence
            cdr3_b_aa: beta_amino_acid_sequence
            cdr3_a_nucseq: alpha_nucleotide_sequence
            cdr3_b_nucseq: beta_nucleotide_sequence
            v_a_gene: alpha_v_gene
            v_b_gene: beta_v_gene
            j_a_gene: alpha_j_gene
            j_b_gene: beta_j_gene
            clone_id: identifier
            epitope: epitope
        organism: mouse
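The interplay between column_mapping and column_mapping_synonyms described above can be illustrated with a small standalone sketch. This is not immuneML's implementation: the helper names resolve_mapping and read_mapped_rows, the input column cdr3b and the example data are hypothetical, and the sketch assumes that a synonym is only consulted when no column from column_mapping supplied the same target field.

```python
import csv
import io


def resolve_mapping(header, column_mapping, column_mapping_synonyms=None):
    """Return {file column: target field} for the columns present in the file (hypothetical helper)."""
    # Keep only the mappings whose source column actually exists in the file.
    resolved = {col: field for col, field in column_mapping.items() if col in header}
    found_fields = set(resolved.values())
    # Assumption: synonyms are tried only for target fields that are still missing.
    for col, field in (column_mapping_synonyms or {}).items():
        if col in header and field not in found_fields:
            resolved[col] = field
            found_fields.add(field)
    return resolved


def read_mapped_rows(text, separator, column_mapping, column_mapping_synonyms=None):
    """Read delimited text and rename mapped columns; unmapped columns keep their names."""
    reader = csv.DictReader(io.StringIO(text), delimiter=separator)
    mapping = resolve_mapping(reader.fieldnames, column_mapping, column_mapping_synonyms)
    return [{mapping.get(col, col): value for col, value in row.items()} for row in reader]


# Made-up file content: the beta chain column is named "cdr3b" instead of "cdr3_b_aa".
example = "cdr3_a_aa\tcdr3b\tclone_id\tepitope\nCAVRF\tCASSLF\tc1\tGILGFVFTL\n"

rows = read_mapped_rows(
    example,
    separator="\t",
    column_mapping={
        "cdr3_a_aa": "alpha_amino_acid_sequence",
        "cdr3_b_aa": "beta_amino_acid_sequence",
        "clone_id": "identifier",
    },
    column_mapping_synonyms={"cdr3b": "beta_amino_acid_sequence"},
)
print(rows[0])
```

In this sketch the epitope column is not part of the mapping, so it is carried through unchanged, mirroring how unmapped columns end up as metadata fields.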

TenxGenomics

Imports data from the 10x Genomics Cell Ranger analysis pipeline into a Repertoire-, Sequence- or ReceptorDataset. RepertoireDatasets should be used when making predictions per repertoire, such as predicting a disease state. SequenceDatasets or ReceptorDatasets should be used when predicting values for unpaired (single-chain) and paired immune receptors respectively, like antigen specificity.

The files that should be used as input are named ‘Clonotype consensus annotations (CSV)’, as described here: https://support.10xgenomics.com/single-cell-vdj/software/pipelines/latest/output/annotation#consensus

Note: by default the 10xGenomics field ‘umis’ is used to define the immuneML field counts. If you want to use the 10x Genomics field reads instead, this can be changed in the column_mapping (set reads: counts). Furthermore, the 10xGenomics field clonotype_id is used for the immuneML field cell_id.

Arguments:

path (str): This is the path to a directory with 10xGenomics files to import. By default path is set to the current working directory.

is_repertoire (bool): If True, this imports a RepertoireDataset. If False, it imports a SequenceDataset or ReceptorDataset. By default, is_repertoire is set to True.

metadata_file (str): Required for RepertoireDatasets. This parameter specifies the path to the metadata file. This is a csv file with columns filename, subject_id and arbitrary other columns which can be used as labels in instructions. For setting Sequence- or ReceptorDataset metadata, metadata_file is ignored, see metadata_column_mapping instead.

paired (str): Required for Sequence- or ReceptorDatasets. This parameter determines whether to import a SequenceDataset (paired = False) or a ReceptorDataset (paired = True). In a ReceptorDataset, two sequences with chain types specified by receptor_chains are paired together based on the identifier given in the 10xGenomics column named ‘clonotype_id’.

receptor_chains (str): Required for ReceptorDatasets. Determines which pair of chains to import for each Receptor. Valid values are TRA_TRB, TRG_TRD, IGH_IGL, IGH_IGK. If receptor_chains is not provided, the chain pair is automatically detected (only one chain pair type allowed per repertoire).

import_illegal_characters (bool): Whether to import sequences that contain illegal characters, i.e., characters that do not appear in the sequence alphabet (amino acids including stop codon ‘*’, or nucleotides). When set to false, filtering is only applied to the sequence type of interest (when running immuneML in amino acid mode, only entries with illegal characters in the amino acid sequence are removed). By default import_illegal_characters is False.

import_empty_nt_sequences (bool): imports sequences which have an empty nucleotide sequence field; can be True or False. By default, import_empty_nt_sequences is set to True.

import_empty_aa_sequences (bool): imports sequences which have an empty amino acid sequence field; can be True or False; for analysis on amino acid sequences, this parameter should be False (import only non-empty amino acid sequences). By default, import_empty_aa_sequences is set to False.

region_type (str): Which part of the sequence to import. By default, this value is set to IMGT_CDR3. This means the first and last amino acids are removed from the CDR3 sequence, as 10xGenomics uses IMGT junction as CDR3. Specifying any other value will result in importing the sequences as they are. Valid values are IMGT_CDR1, IMGT_CDR2, IMGT_CDR3, IMGT_FR1, IMGT_FR2, IMGT_FR3, IMGT_FR4, IMGT_JUNCTION, FULL_SEQUENCE.

column_mapping (dict): A mapping from 10xGenomics column names to immuneML’s internal data representation. For 10xGenomics, this is by default set to:

cdr3: sequence_aas
cdr3_nt: sequences
v_gene: v_genes
j_gene: j_genes
umis: counts
chain: chains
clonotype_id: cell_ids
consensus_id: sequence_identifiers

A custom column mapping can be specified here if necessary (for example; adding additional data fields if they are present in the 10xGenomics file, or using alternative column names). Valid immuneML fields that can be specified here are [‘sequence_aas’, ‘sequences’, ‘v_genes’, ‘j_genes’, ‘v_subgroups’, ‘j_subgroups’, ‘v_alleles’, ‘j_alleles’, ‘chains’, ‘counts’, ‘frame_types’, ‘sequence_identifiers’, ‘cell_ids’].

column_mapping_synonyms (dict): This is a column mapping that can be used if a column could have alternative names. The formatting is the same as column_mapping. If some columns specified in column_mapping are not found in the file, the columns specified in column_mapping_synonyms are instead attempted to be loaded. For 10xGenomics format, there is no default column_mapping_synonyms.

metadata_column_mapping (dict): Specifies metadata for Sequence- and ReceptorDatasets. This should specify a mapping similar to column_mapping where keys are 10xGenomics column names and values are the names that are internally used in immuneML as metadata fields. These metadata fields can be used as prediction labels for Sequence- and ReceptorDatasets. For 10xGenomics format, there is no default metadata_column_mapping. For setting RepertoireDataset metadata, metadata_column_mapping is ignored, see metadata_file instead.

separator (str): Column separator, for 10xGenomics this is by default “,”.

YAML specification:

my_10x_dataset:
    format: 10xGenomics
    params:
        path: path/to/files/
        is_repertoire: True # whether to import a RepertoireDataset
        metadata_file: path/to/metadata.csv # metadata file for RepertoireDataset
        paired: False # whether to import SequenceDataset (False) or ReceptorDataset (True) when is_repertoire = False
        receptor_chains: TRA_TRB # what chain pair to import for a ReceptorDataset
        metadata_column_mapping: # metadata column mapping 10xGenomics: immuneML for SequenceDataset
            tenx_column_name1: metadata_label1
            tenx_column_name2: metadata_label2
        import_illegal_characters: False # remove sequences with illegal characters for the sequence_type being used
        import_empty_nt_sequences: True # keep sequences even though the nucleotide sequence might be empty
        import_empty_aa_sequences: False # filter out sequences if they don't have sequence_aa set
        # Optional fields with 10xGenomics-specific defaults, only change when different behavior is required:
        separator: "," # column separator
        region_type: IMGT_CDR3 # what part of the sequence to import
        column_mapping: # column mapping 10xGenomics: immuneML
            cdr3: sequence_aas
            cdr3_nt: sequences
            v_gene: v_genes
            j_gene: j_genes
            umis: counts
            chain: chains
            clonotype_id: cell_ids
            consensus_id: sequence_identifiers
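To make the pairing behaviour for ReceptorDatasets more concrete, the following sketch groups rows by the 10xGenomics clonotype_id column and pairs the two chain types selected by receptor_chains. It is only an illustration under stated assumptions: the function pair_chains and the example rows are hypothetical, and pairing every first chain with every second chain inside a clonotype is a simplification that may differ from how immuneML treats clonotypes with more than two chains.

```python
from collections import defaultdict

# Chain pairs corresponding to the valid receptor_chains values.
CHAIN_PAIRS = {
    "TRA_TRB": ("TRA", "TRB"),
    "TRG_TRD": ("TRG", "TRD"),
    "IGH_IGL": ("IGH", "IGL"),
    "IGH_IGK": ("IGH", "IGK"),
}


def pair_chains(rows, receptor_chains):
    """Pair sequences that share a clonotype_id (hypothetical helper, not immuneML code)."""
    first, second = CHAIN_PAIRS[receptor_chains]

    # clonotype_id -> chain name -> rows with that chain
    by_clonotype = defaultdict(lambda: defaultdict(list))
    for row in rows:
        by_clonotype[row["clonotype_id"]][row["chain"]].append(row)

    receptors = []
    for clonotype_id, chains in by_clonotype.items():
        # Assumption: clonotypes missing one of the two chains yield no receptor.
        for a in chains.get(first, []):
            for b in chains.get(second, []):
                receptors.append({"cell_id": clonotype_id, first: a["cdr3"], second: b["cdr3"]})
    return receptors


# Made-up rows using 10xGenomics column names.
rows = [
    {"clonotype_id": "clonotype1", "chain": "TRA", "cdr3": "CAVRF"},
    {"clonotype_id": "clonotype1", "chain": "TRB", "cdr3": "CASSLF"},
    {"clonotype_id": "clonotype2", "chain": "TRB", "cdr3": "CASSQF"},
]

receptors = pair_chains(rows, "TRA_TRB")
print(receptors)
```

Here clonotype2 has only a TRB chain, so it produces no paired receptor in this sketch.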

VDJdb

Imports data in VDJdb format into a Repertoire-, Sequence- or ReceptorDataset. RepertoireDatasets should be used when making predictions per repertoire, such as predicting a disease state. SequenceDatasets or ReceptorDatasets should be used when predicting values for unpaired (single-chain) and paired immune receptors respectively, like antigen specificity.

Arguments:

path (str): This is the path to a directory with VDJdb files to import. By default path is set to the current working directory.

is_repertoire (bool): If True, this imports a RepertoireDataset. If False, it imports a SequenceDataset or ReceptorDataset. By default, is_repertoire is set to True.

metadata_file (str): Required for RepertoireDatasets. This parameter specifies the path to the metadata file. This is a csv file with columns filename, subject_id and arbitrary other columns which can be used as labels in instructions. For setting Sequence- or ReceptorDataset metadata, metadata_file is ignored, see metadata_column_mapping instead.

paired (str): Required for Sequence- or ReceptorDatasets. This parameter determines whether to import a SequenceDataset (paired = False) or a ReceptorDataset (paired = True). In a ReceptorDataset, two sequences with chain types specified by receptor_chains are paired together based on the identifier given in the VDJdb column named ‘complex.id’.

receptor_chains (str): Required for ReceptorDatasets. Determines which pair of chains to import for each Receptor. Valid values are TRA_TRB, TRG_TRD, IGH_IGL, IGH_IGK. If receptor_chains is not provided, the chain pair is automatically detected (only one chain pair type allowed per repertoire).

import_illegal_characters (bool): Whether to import sequences that contain illegal characters, i.e., characters that do not appear in the sequence alphabet (amino acids including stop codon ‘*’, or nucleotides). When set to false, filtering is only applied to the sequence type of interest (when running immuneML in amino acid mode, only entries with illegal characters in the amino acid sequence are removed). By default import_illegal_characters is False.

import_empty_nt_sequences (bool): imports sequences which have an empty nucleotide sequence field; can be True or False. By default, import_empty_nt_sequences is set to True.

import_empty_aa_sequences (bool): imports sequences which have an empty amino acid sequence field; can be True or False; for analysis on amino acid sequences, this parameter should be False (import only non-empty amino acid sequences). By default, import_empty_aa_sequences is set to False.

region_type (str): Which part of the sequence to import. By default, this value is set to IMGT_CDR3. This means the first and last amino acids are removed from the CDR3 sequence, as VDJdb uses IMGT junction as CDR3. Specifying any other value will result in importing the sequences as they are. Valid values are IMGT_CDR1, IMGT_CDR2, IMGT_CDR3, IMGT_FR1, IMGT_FR2, IMGT_FR3, IMGT_FR4, IMGT_JUNCTION, FULL_SEQUENCE.

column_mapping (dict): A mapping from VDJdb column names to immuneML’s internal data representation. For VDJdb, this is by default set to:

V: v_alleles
J: j_alleles
CDR3: sequence_aas
complex.id: sequence_identifiers
Gene: chains

A custom column mapping can be specified here if necessary (for example; adding additional data fields if they are present in the VDJdb file, or using alternative column names). Valid immuneML fields that can be specified here are [‘sequence_aas’, ‘sequences’, ‘v_genes’, ‘j_genes’, ‘v_subgroups’, ‘j_subgroups’, ‘v_alleles’, ‘j_alleles’, ‘chains’, ‘counts’, ‘frame_types’, ‘sequence_identifiers’, ‘cell_ids’].

column_mapping_synonyms (dict): This is a column mapping that can be used if a column could have alternative names. The formatting is the same as column_mapping. If some columns specified in column_mapping are not found in the file, the columns specified in column_mapping_synonyms are instead attempted to be loaded. For VDJdb format, there is no default column_mapping_synonyms.

metadata_column_mapping (dict): Specifies metadata for Sequence- and ReceptorDatasets. This should specify a mapping where keys are VDJdb column names and values are the names that are internally used in immuneML as metadata fields. For VDJdb format, this parameter is by default set to:

Epitope: epitope
Epitope gene: epitope_gene
Epitope species: epitope_species

This means that epitope, epitope_gene and epitope_species can be specified as prediction labels for Sequence- and ReceptorDatasets. Custom metadata labels can be defined here as well. For setting RepertoireDataset metadata, metadata_column_mapping is ignored, see metadata_file instead.

separator (str): Column separator, for VDJdb this is by default “\t”.

YAML specification:

my_vdjdb_dataset:
    format: VDJdb
    params:
        path: path/to/files/
        is_repertoire: True # whether to import a RepertoireDataset
        metadata_file: path/to/metadata.csv # metadata file for RepertoireDataset
        paired: False # whether to import SequenceDataset (False) or ReceptorDataset (True) when is_repertoire = False
        receptor_chains: TRA_TRB # what chain pair to import for a ReceptorDataset
        import_illegal_characters: False # remove sequences with illegal characters for the sequence_type being used
        import_empty_nt_sequences: True # keep sequences even though the nucleotide sequence might be empty
        import_empty_aa_sequences: False # filter out sequences if they don't have sequence_aa set
        # Optional fields with VDJdb-specific defaults, only change when different behavior is required:
        separator: "\t" # column separator
        region_type: IMGT_CDR3 # what part of the sequence to import
        column_mapping: # column mapping VDJdb: immuneML
            V: v_genes
            J: j_genes
            CDR3: sequence_aas
            complex.id: sequence_identifiers
            Gene: chains
        metadata_column_mapping: # metadata column mapping VDJdb: immuneML
            Epitope: epitope
            Epitope gene: epitope_gene
            Epitope species: epitope_species
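The effect of region_type on imported amino acid sequences can be sketched as follows. The function import_region is a hypothetical helper written for this illustration, not part of immuneML: it assumes the input holds the IMGT junction and drops the first and last amino acid when region_type is IMGT_CDR3, leaving the sequence untouched for any other value. Nucleotide sequences are not handled here.

```python
def import_region(sequence_aa, region_type="IMGT_CDR3"):
    """Return the amino acid sequence as it would be kept for the given region_type (illustrative only)."""
    # Assumption: the input is the IMGT junction, i.e. the CDR3 flanked by the
    # conserved leading C and trailing F/W/Y residue.
    if region_type == "IMGT_CDR3" and len(sequence_aa) >= 2:
        return sequence_aa[1:-1]
    # Any other region_type: import the sequence as it is.
    return sequence_aa


junction = "CASSLGTDTQYF"  # made-up example junction

cdr3 = import_region(junction, "IMGT_CDR3")
unchanged = import_region(junction, "IMGT_JUNCTION")
print(cdr3, unchanged)
```

If the input file already contains CDR3 sequences without the flanking residues, keeping the default IMGT_CDR3 would trim two further amino acids, so a different region_type value may be the safer choice in that case.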

Simulation

Motif

Class describing motifs, where each motif is defined by a seed and a way of creating specific instances of the motif (instantiation_strategy).

When instantiation_strategy is set, specific motif instances are produced by calling the instantiate_motif(seed) method of instantiation_strategy.

Arguments:

seed (str): An amino acid sequence that represents the basic motif seed. All implanted motifs correspond to the seed, or a modified version thereof, as specified in its instantiation strategy. If this argument is set, seed_chain1 and seed_chain2 arguments are not used.

instantiation (